In this post I will create a 3-node Docker Swarm cluster and use GlusterFS to share volume storage across the Swarm nodes.

Introduction

Swarm mode in Docker turns a group of Docker hosts into a cluster to run containers on. The problem I had is this: if container “A” runs on “node1” with the named volume “voldata”, all changes made to “voldata” are saved locally on “node1”. If container A is shut down and happens to start again on a different node, say “node3”, the named volume “voldata” it mounts there will be empty and will not contain the changes made while it was mounted on “node1”.

In this example I will not use named volumes. Instead I will use a shared mount that is available on all cluster nodes. The same approach can of course be applied to the folder that holds named volumes.

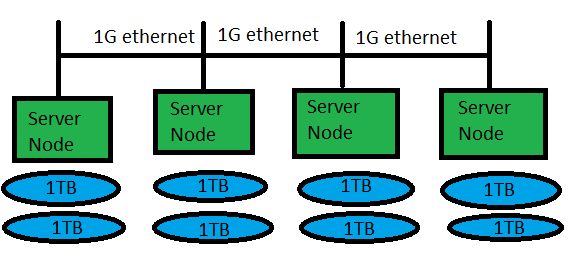

For this exercise I’m using 3 EC2 instances on AWS, each with 1 attached EBS volume.

How to get around this?

One way to solve this is to use GlusterFS to replicate volumes across the swarm nodes and make the data available to all nodes at any time. Named volumes will still be local to each Docker host, since GlusterFS takes care of the replication.

Preparation on each server

I will use Ubuntu 16.04 for this exercise.

First we put friendly names in /etc/hosts:

    XX.XX.XX.XX node1
    XX.XX.XX.XX node2
    XX.XX.XX.XX node3
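Since every later step relies on these names, it can help to confirm that each node really has all three entries. The helper below is only a sketch; the function name `missing_hosts` is my own invention and not part of any tool.

```shell
# Hypothetical helper: print every name that has no entry in the given hosts file.
# Usage: missing_hosts /etc/hosts node1 node2 node3
missing_hosts() {
    hosts_file="$1"
    shift
    for name in "$@"; do
        # Skip comment lines, then look for the name in any column after the address.
        if ! awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit found ? 0 : 1 }' "$hosts_file"; then
            echo "$name"
        fi
    done
}
```

Running `missing_hosts /etc/hosts node1 node2 node3` on each server should print nothing when all three names are present.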

Then we update the system:

    $ sudo apt update
    $ sudo apt upgrade

Finally we reboot the server, then install the necessary packages on all nodes:

    $ sudo apt install -y docker.io
    $ sudo apt install -y glusterfs-server

Then start the services:

    $ sudo systemctl start glusterfs-server
    $ sudo systemctl start docker

Create the directories for the GlusterFS brick and for the shared mount:

    $ sudo mkdir -p /gluster/data /swarm/volumes

GlusterFS setup

First we prepare the filesystem for the Gluster storage on all nodes:

    $ sudo mkfs.xfs /dev/xvdb
    $ sudo mount /dev/xvdb /gluster/data/

From node1:

    $ sudo gluster peer probe node2
    peer probe: success.
    $ sudo gluster peer probe node3
    peer probe: success.
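Before going further it can be worth confirming that both probes took effect. The sketch below counts connected peers in the output of `gluster peer status`. It assumes the `State: Peer in Cluster (Connected)` wording that GlusterFS 3.x prints as far as I recall, and the function name `connected_peers` is hypothetical.

```shell
# Hypothetical helper: count peers reported as connected.
# Reads `gluster peer status` output on stdin.
connected_peers() {
    # grep -c prints the number of matching lines; `|| true` keeps the
    # exit status at zero when the count is 0.
    grep -c -F 'State: Peer in Cluster (Connected)' || true
}
```

On node1, `sudo gluster peer status | connected_peers` should report 2 once node2 and node3 have joined.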

Create the volume as a mirror:

    $ sudo gluster volume create swarm-vols replica 3 node1:/gluster/data node2:/gluster/data node3:/gluster/data force
    volume create: swarm-vols: success: please start the volume to access data

Allow mount connections only from localhost:

    $ sudo gluster volume set swarm-vols auth.allow 127.0.0.1
    volume set: success

Then start the volume:

    $ sudo gluster volume start swarm-vols
    volume start: swarm-vols: success

Then on each Gluster node we mount the shared, mirrored GlusterFS volume locally:

    $ sudo mount.glusterfs localhost:/swarm-vols /swarm/volumes
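To check that /swarm/volumes is really backed by GlusterFS and not just an empty local directory, the filesystem type can be read from /proc/mounts. This is a minimal sketch; `fs_type_of` is a name I made up, and its optional second argument exists only so the function can be tried against a sample file.

```shell
# Hypothetical helper: print the filesystem type mounted at a directory.
# Pass the directory without a trailing slash.
fs_type_of() {
    dir="$1"
    mounts="${2:-/proc/mounts}"
    # /proc/mounts columns: device, mount point, type, options, dump, pass.
    # Keep the last match, since a later mount shadows an earlier one.
    awk -v d="$dir" '$2 == d { t = $3 } END { if (t != "") print t }' "$mounts"
}
```

On a node where the mount succeeded, I would expect `fs_type_of /swarm/volumes` to print `fuse.glusterfs`; an empty result means nothing is mounted there.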

Docker swarm setup

Here I will create 1 manager node and 2 worker nodes.

    $ sudo docker swarm init
    Swarm initialized: current node (82f5ud4z97q7q74bz9ycwclnd) is now a manager.

    To add a worker to this swarm, run the following command:
        docker swarm join \
        --token SWMTKN-1-697xeeiei6wsnsr29ult7num899o5febad143ellqx7mt8avwn-1m7wlh59vunohq45x3g075r2h \
        172.31.24.234:2377

    To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

Get the token for worker nodes:

    $ sudo docker swarm join-token worker
    To add a worker to this swarm, run the following command:
        docker swarm join \
        --token SWMTKN-1-697xeeiei6wsnsr29ult7num899o5febad143ellqx7mt8avwn-1m7wlh59vunohq45x3g075r2h \
        172.31.24.234:2377

Then on both worker nodes:

    $ sudo docker swarm join --token SWMTKN-1-697xeeiei6wsnsr29ult7num899o5febad143ellqx7mt8avwn-1m7wlh59vunohq45x3g075r2h 172.31.24.234:2377
    This node joined a swarm as a worker.
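If the join step needs to be scripted instead of copied by hand, `docker swarm join-token` accepts `-q` to print only the token. The sketch below builds the worker join command on the manager. The function name `join_command` and the `DOCKER` override variable are assumptions of mine, the latter added so the function can be dry-run without a Docker daemon.

```shell
# Hypothetical helper: print the join command for worker nodes.
# DOCKER is an optional override for dry runs (e.g. DOCKER=echo).
join_command() {
    manager_addr="$1"
    # -q makes join-token print the bare token with no surrounding text.
    token=$(${DOCKER:-sudo docker} swarm join-token -q worker) || return 1
    echo "docker swarm join --token $token $manager_addr"
}
```

Calling `join_command 172.31.24.234:2377` on the manager prints a single line that can then be run with sudo on each worker.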

Verify the swarm cluster:

    $ sudo docker node ls
    ID                           HOSTNAME          STATUS  AVAILABILITY  MANAGER STATUS
    6he3dgbanee20h7lul705q196    ip-172-31-27-191  Ready   Active
    82f5ud4z97q7q74bz9ycwclnd *  ip-172-31-24-234  Ready   Active        Leader
    c7daeowfoyfua2hy0ueiznbjo    ip-172-31-26-52   Ready   Active

Testing

To test, I will label node1 and node3, create a container on node1, shut it down, and then create it again on node3 with the same volume mount. We should then see that the files created by both containers end up in the same shared directory.

Label swarm nodes:

    $ sudo docker node update --label-add nodename=node1 ip-172-31-24-234
    ip-172-31-24-234
    $ sudo docker node update --label-add nodename=node3 ip-172-31-26-52
    ip-172-31-26-52

Check the labels:

    $ sudo docker node inspect --pretty ip-172-31-26-52
    ID:                     c7daeowfoyfua2hy0ueiznbjo
    Labels:
     - nodename = node3
    Hostname:               ip-172-31-26-52
    Joined at:              2017-01-06 22:44:17.323236832 +0000 utc
    Status:
     State:                 Ready
     Availability:          Active
    Platform:
     Operating System:      linux
     Architecture:          x86_64
    Resources:
     CPUs:                  1
     Memory:                1.952 GiB
    Plugins:
      Network:              bridge, host, null, overlay
      Volume:               local
    Engine Version:         1.12.1
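The services in this test bind-mount /swarm/volumes/testvol, and a bind mount needs its source directory to exist on the node where the task runs. Because /swarm/volumes is the shared GlusterFS mount, creating the directory once on any node should be enough. A minimal sketch, with `prepare_test_dir` as a hypothetical name:

```shell
# Hypothetical helper: create the testvol directory under the shared root.
# The root defaults to the GlusterFS mount point used in this post.
prepare_test_dir() {
    root="${1:-/swarm/volumes}"
    # Refuse to continue if the shared root itself is missing, which would
    # suggest the GlusterFS mount step was skipped on this node.
    [ -d "$root" ] || { echo "missing $root" >&2; return 1; }
    mkdir -p "$root/testvol"
}
```

Run it from a root shell, or simply use `sudo mkdir -p /swarm/volumes/testvol`, which does the same thing without the check.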

Create Docker service on node1 that will create a file in the shared volume:

    $ sudo docker service create --name testcon \
        --constraint 'node.labels.nodename == node1' \
        --mount type=bind,source=/swarm/volumes/testvol,target=/mnt/testvol \
        ubuntu:latest /bin/touch /mnt/testvol/testfile1.txt
    duvqo3btdrrlwf61g3bu5uaom

Verify service creation:

    $ sudo docker service ls
    ID            NAME     REPLICAS  IMAGE    COMMAND
    duvqo3btdrrl  testcon  0/1       busybox  /bin/bash

Check that it’s running on node1:

    $ sudo docker service ps testcon
    ID                         NAME           IMAGE          NODE              DESIRED STATE  CURRENT STATE           ERROR
    6nw6sm8sak512x24bty7fwxwz  testcon.1      ubuntu:latest  ip-172-31-24-234  Ready          Ready 1 seconds ago
    6ctzew4b3rmpkf4barkp1idhx   \_ testcon.1  ubuntu:latest  ip-172-31-24-234  Shutdown       Complete 1 seconds ago

Also check the volume mounts:

    $ sudo docker inspect testcon
    [
        {
            "ID": "8lnpmwcv56xwmwavu3gc2aay8",
            "Version": {
                "Index": 26
            },
            "CreatedAt": "2017-01-06T23:03:01.93363267Z",
            "UpdatedAt": "2017-01-06T23:03:01.935557744Z",
            "Spec": {
                "ContainerSpec": {
                    "Image": "busybox",
                    "Args": [
                        "/bin/bash"
                    ],
                    "Mounts": [
                        {
                            "Type": "bind",
                            "Source": "/swarm/volumes/testvol",
                            "Target": "/mnt/testvol"
                        }
                    ]
                },
                "Resources": {
                    "Limits": {},
                    "Reservations": {}
                },
                "RestartPolicy": {
                    "Condition": "any",
                    "MaxAttempts": 0
                },
                "Placement": {
                    "Constraints": [
                        "nodename == node1"
                    ]
                }
            },
            "ServiceID": "duvqo3btdrrlwf61g3bu5uaom",
            "Slot": 1,
            "Status": {
                "Timestamp": "2017-01-06T23:03:01.935553276Z",
                "State": "allocated",
                "Message": "allocated",
                "ContainerStatus": {}
            },
            "DesiredState": "running"
        }
    ]
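Service names must be unique within a swarm, so the existing testcon service has to be removed before one with the same name can be created on another node. A minimal sketch of that step, where `remove_service` and the `DOCKER` override are hypothetical names I added so the command can be dry-run:

```shell
# Hypothetical helper: remove a swarm service by name.
# DOCKER is an optional override for dry runs (e.g. DOCKER=echo).
remove_service() {
    ${DOCKER:-sudo docker} service rm "$1"
}
```

On the manager node, `remove_service testcon` is equivalent to running `sudo docker service rm testcon` directly.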

Shut down the service and then create it again on node3:

    $ sudo docker service create --name testcon \
        --constraint 'node.labels.nodename == node3' \
        --mount type=bind,source=/swarm/volumes/testvol,target=/mnt/testvol \
        ubuntu:latest /bin/touch /mnt/testvol/testfile3.txt
    5y99c0bfmc2fywor3lcsvmm9q

Verify that it has run on node3:

    $ sudo docker service ps testcon
    ID                         NAME           IMAGE          NODE             DESIRED STATE  CURRENT STATE           ERROR
    5p57xyottput3w34r7fclamd9  testcon.1      ubuntu:latest  ip-172-31-26-52  Ready          Ready 1 seconds ago
    aniesakdmrdyuq8m2ddn3ga9b   \_ testcon.1  ubuntu:latest  ip-172-31-26-52  Shutdown       Complete 2 seconds ago

Now check that the files created by both containers exist in the same volume:

    $ ls -l /swarm/volumes/testvol/
    total 0
    -rw-r--r-- 1 root root 0 Jan 6 23:59 testfile3.txt
    -rw-r--r-- 1 root root 0 Jan 6 23:58 testfile1.txt